How Light, Lens, and Logic Reveal the Hidden Worlds Within and Without

Table of Contents

- Introduction – Seeing Through Two Lenses
  Photography and science: twin pursuits of revelation and clarity
- A Brief History of Photography as Science
  From chemistry and optics to image intelligence
- The Evolution of Imaging: Beyond the Human Eye
  X-rays, MRIs, satellites, and microscopes: the expansion of visual capability
- The Tools of Vision: Cameras, Sensors, and Algorithms
  The physics and technology behind modern photographic systems
- Photographic Intelligence: IMINT, AI, and Data-Driven Imagery
  How photography supports intelligence, research, and decision-making
- Types of Photography and What They Reveal
  Documentary, scientific, artistic, forensic, astronomical, and more
- Digital Photography and Computational Imaging
  From pixels to post-processing: how computation reshaped light and form
- The Science of Seeing: Light, Optics, and Perception
  How our brains and devices translate light into meaning
- Photography as Scientific Method and Metaphor
  Case studies in how images changed our understanding of nature
- The Ethical Lens: Truth, Manipulation, and Representation
  The responsibility of seeing and showing in a world of deepfakes and filters
- The Future of Imaging: Quantum, Hyperspectral, and Beyond
  What tomorrow’s lenses will show us, and how we’ll need to prepare
- Conclusion – The Light Inside the Lens
  Photography not just as observation, but illumination

1. Introduction – Seeing Through Two Lenses

Photography and science: twin pursuits of revelation and clarity

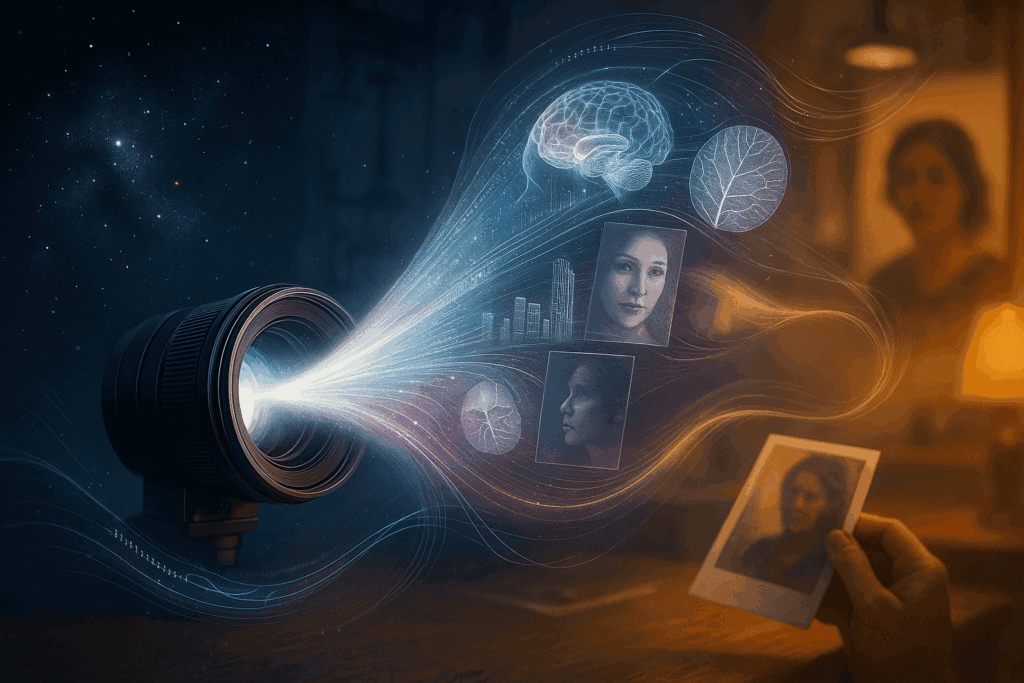

Photography and science have always shared a secret handshake. One seeks to capture the world as it is; the other, to understand it. But the more you study either, the more you realize they are not just allies—they are kin. Both begin with questions: What is there? What does it mean? Can it be seen? And both answer through the same language—light, time, and attention.

To photograph something is to preserve a sliver of the world, to fix a moment that would otherwise dissolve. To study something scientifically is to observe it with method and care, isolating variables and revealing patterns. At their best, each provides a clearer view—not just of the surface of things, but of their structure, behavior, and hidden nature.

The first photographs were chemical miracles. Silver salts, glass plates, and darkrooms gave way to film reels, lenses, and the shutter’s whisper. But photography has always been more than art—it has been a tool of discovery. From early daguerreotypes of the Moon to the microscopic etchings of cellular life, photography helped science see what it previously could only imagine.

Today, we live in an age of astonishing imaging. Satellites gaze across continents. MRI machines slice the body into viewable layers. Quantum cameras flirt with the edges of what can be known. Meanwhile, in the palms of our hands, devices of immense power interpret light in ways that were once the domain of national labs and deep-space observatories.

This article explores the dance between image and inquiry. We’ll trace the history of photography’s scientific roots, walk through the modern methods of imaging—medical, remote, computational—and explore how photography itself has become a science. We’ll also ask a deeper question: in a world where images can be generated by algorithms and altered at will, what does it mean to see truthfully?

This is the story of photography as science—and science as something we now photograph. Light meets lens. Thought meets frame. The result? A revelation.

2. A Brief History of Photography as Science

From chemistry and optics to image intelligence

Long before the camera became a household object, photography was a scientific experiment—a bold alchemy of chemistry, light, and lens. The 19th century saw a string of inventions and accidents that would give rise to one of humanity’s most transformative technologies. It began not with selfies or snapshots, but with glass, silver, and curiosity.

In 1839, Louis Daguerre announced the daguerreotype, a process that used silver-plated copper, iodine vapor, and mercury fumes to produce a unique image. The result was mesmerizing: a permanent ghost of reality, captured by light itself. Around the same time, William Henry Fox Talbot was developing the calotype, which used paper negatives and allowed for multiple prints. These early processes were slow, delicate, and chemically dangerous—but they laid the foundation for a new kind of observation.

Scientists were quick to take notice. In astronomy, cameras began recording lunar craters and solar eclipses with greater precision than the human hand. In biology, microscopic photography opened new dimensions inside cells and tissues. In physics, stroboscopic photography captured motion too fast to see—helping visualize concepts from resonance to the trajectory of a bullet.

By the late 19th and early 20th centuries, photography had become an indispensable tool in nearly every scientific discipline. It documented experiments, tracked celestial bodies, recorded environmental changes, and made the invisible visible. Photographic plates captured early X-rays. Time-lapse sequences showed how flowers bloomed, clouds swirled, and embryos formed. These weren’t just pretty pictures—they were data.

Meanwhile, developments in optics and lens design—such as achromatic lenses and fast shutter mechanisms—gave rise to more accurate and sensitive image-making. The invention of roll film by George Eastman in the 1880s democratized photography, but the scientific world remained focused on control, resolution, and repeatability.

In the Cold War era, a new kind of photography emerged: Imagery Intelligence, or IMINT. Satellites and high-altitude aircraft like the U-2 used advanced photographic systems to gather geopolitical data. Scientific photography had moved beyond the lab and telescope; it was now a core part of global surveillance and strategy.

Today, digital sensors, artificial intelligence, and multispectral imaging have made the photographic process more technical than ever. Yet the essence remains unchanged: capturing light, fixing a moment, revealing what the eye alone cannot.

Photography began as a scientific achievement—and it continues to serve science in ways Daguerre could never have imagined. What started with mercury fumes and sunlight has become a data-rich language of precision, perception, and pattern recognition.

3. The Evolution of Imaging – Beyond the Human Eye

X-rays, MRIs, satellites, and microscopes: the expansion of visual capability

Human vision is extraordinary—but limited. We see only a narrow band of the electromagnetic spectrum, are constrained by range and resolution, and can only look from one vantage point at a time. Imaging—scientific imaging—was born to overcome these limits. To extend our senses. To see the unseeable.

Imaging, in its scientific sense, refers to the process of creating visual representations of internal or hidden structures. This may mean photographing the inside of a body, mapping the terrain of distant planets, or revealing atomic lattices invisible to the naked eye. Where photography preserves surfaces, imaging reveals what lies beneath.

In medicine, this began with the groundbreaking discovery of X-rays by Wilhelm Conrad Röntgen in 1895. Suddenly, bones, tumors, and metal fragments could be seen without surgery. Within a decade, X-ray machines were revolutionizing diagnostics. In the 20th century, this expanded to include CT scans, which take multiple X-ray images from different angles to create cross-sectional views; MRI, which uses magnetic fields and radio waves to map soft tissue in exquisite detail; and ultrasound, which turns sound waves into fetal faces and beating hearts.

Meanwhile, in the realm of microscopy, imaging reached downward—far beneath the threshold of vision. Optical microscopes gave way to electron microscopes, revealing viruses, organelles, and crystalline structures with nanometer precision. Scanning tunneling microscopes made it possible to visualize individual atoms. Biology and materials science would never look the same.

In the other direction, imaging also reached outward. Remote sensing, the practice of collecting data about Earth from above, began with balloons and progressed to satellites armed with radar, infrared, and multispectral cameras. These tools don’t just take pretty pictures of Earth—they monitor climate change, detect natural disasters, track deforestation, and measure urban growth.

In astrophysics, imaging has taken us even farther. Radio telescopes translate invisible frequencies into visual maps of galaxies. The James Webb Space Telescope peers back in time, imaging ancient starlight from billions of years ago. Here, photography isn’t just technical—it’s time travel.

Even mental imaging—the creation of internal pictures in the mind—has become a frontier of neuroscience. Functional MRI scans track blood flow in the brain, showing how thought, memory, and imagination light up specific regions. In some labs, researchers are developing technologies that may one day reconstruct mental images directly from neural signals.

From bones to galaxies, from atoms to thoughts, imaging has transformed science’s capacity to understand the world. It does what photography alone cannot: it makes the hidden visible, the fleeting measurable, and the distant knowable.

4. The Tools of Vision – Cameras, Sensors, and Algorithms

The physics and technology behind modern photographic systems

Behind every image—whether a museum-quality photograph, a diagnostic scan, or a satellite view—lies a quiet harmony of physics, chemistry, and computation. The camera, once a wooden box and a silvered plate, has become a matrix of precision components, each calibrated to master light.

At the heart of every camera is the lens, a sculpted piece of glass or plastic that bends light to form an image. Lenses come in many varieties—wide-angle, telephoto, macro—but all work by directing photons toward a focal plane. In high-end scientific imaging, lenses are often custom-engineered for minimal distortion, maximum clarity, and compatibility with non-visible wavelengths like ultraviolet or infrared.

The lens focuses light onto a sensor, today typically a CMOS (Complementary Metal-Oxide-Semiconductor) or CCD (Charge-Coupled Device) chip. These chips contain millions of tiny light-sensitive units called photosites, which convert incoming photons into electrical signals. Unlike film, which captured images chemically, sensors record them electronically, enabling near-instantaneous capture and lossless duplication.

Sensor sensitivity is expressed as an ISO rating, and dynamic range describes the span between the darkest and brightest tones the sensor can record in a single exposure. Resolution, measured in megapixels, matters, but so does the quality of those pixels. In scientific cameras, bit depth (how many levels of brightness each pixel can record) is often more important than pixel count.
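To make the relationship between bit depth, brightness levels, and dynamic range concrete, here is a minimal sketch. The function names and the sensor figures (full-well capacity and read noise) are hypothetical values chosen for illustration, not the specifications of any real camera.

```python
import math


def brightness_levels(bit_depth: int) -> int:
    """Number of distinct brightness values an N-bit sample can encode."""
    return 2 ** bit_depth


def dynamic_range_stops(full_well_electrons: float, read_noise_electrons: float) -> float:
    """Dynamic range in stops: log2 of the largest signal over the noise floor."""
    return math.log2(full_well_electrons / read_noise_electrons)


for bits in (8, 12, 16):
    print(f"{bits}-bit: {brightness_levels(bits)} levels")

# Hypothetical sensor: 32,000 electrons full-well capacity, 2 electrons read noise.
print(f"dynamic range: {dynamic_range_stops(32_000, 2):.1f} stops")
```

Under these assumptions the sensor spans roughly 14 stops, so an 8-bit file could not hold its full range without compressing tones, which is one reason scientific workflows tend to favor higher bit depths.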

From here, the image becomes data—a stream of electrical information interpreted and refined by an image processor. In a smartphone, this processor may instantly apply automatic adjustments: sharpening edges, boosting colors, reducing noise. In a scientific context, raw data is usually preserved for careful analysis. The image processor is guided by algorithms, many of them powered today by machine learning models trained to enhance detail, detect patterns, or eliminate noise.

For imaging beyond the visible spectrum—such as thermal, hyperspectral, or X-ray photography—specialized sensors replace traditional silicon chips. These include InGaAs sensors for infrared, bolometers for thermal radiation, and photomultiplier tubes for extremely low light. Each sensor type is tuned to respond to specific physical properties, translating them into visible imagery.

Some systems are mobile: drones, robotic endoscopes, wearable bodycams. Others are massive: ground-based telescopes with adaptive optics, synchrotron radiation facilities used for imaging molecular structures. Increasingly, they’re also intelligent—powered by software that doesn’t just capture images, but understands them.

This convergence of hardware and software has redefined what it means to photograph. We are no longer just pointing and shooting. We are assembling light, filtering data, modeling behavior, and refining vision—across scales, media, and environments.

The camera is no longer a box. It is a system—a tool of seeing and knowing.

5. Photographic Intelligence – IMINT, AI, and Data-Driven Imagery

How photography supports intelligence, research, and decision-making

Photography doesn’t merely document reality—it informs decisions. This is the domain of photographic intelligence, where images are more than pictures. They are evidence. Coordinates. Clues. In the military, in science, and increasingly in daily life, photography has become a source of actionable knowledge.

One of the most developed forms of this is IMINT—Imagery Intelligence. A cornerstone of military and surveillance strategy since the mid-20th century, IMINT uses photographic technologies to gather visual information about activities, environments, and targets. It began with cameras mounted on planes and balloons. Then came satellites like the American CORONA program, which returned physical film from orbit—parachuting it back to Earth for analysis.

Today, high-resolution satellites and UAVs (unmanned aerial vehicles) continuously collect images of cities, forests, oceans, and warzones. AI-assisted systems sift through this imagery to detect troop movements, illegal mining, flood zones, or emerging wildfires—often in real time.

Outside of defense, this kind of visual analysis is transforming industries. In agriculture, drone and satellite imaging helps monitor crop health, water usage, and pest activity. In urban planning, aerial imaging tracks population density and infrastructure needs. In environmental science, long-term satellite photography reveals changes in ice caps, coral reefs, and deforestation patterns.
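One widely used way to turn such imagery into a crop-health number is the Normalized Difference Vegetation Index (NDVI), which compares near-infrared and red reflectance. The article does not name a specific index, so treat this as one illustrative choice; the reflectance values below are made up.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index for one pixel.

    Healthy vegetation reflects strongly in near-infrared and absorbs red,
    which pushes the index toward +1.
    """
    total = nir + red
    if total == 0:
        return 0.0  # no signal in either band; treat as neutral
    return (nir - red) / total


# Hypothetical reflectance values (0..1) as (near-infrared, red).
pixels = {
    "healthy crop": (0.50, 0.08),
    "stressed crop": (0.30, 0.15),
    "bare soil": (0.25, 0.20),
}

for name, (nir, red) in pixels.items():
    print(f"{name}: NDVI = {ndvi(nir, red):.2f}")
```

Applied to every pixel of a multispectral frame, the same arithmetic yields a map in which stressed areas stand out before they are obvious to the eye.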

At the individual level, machine learning models can now analyze images to recognize faces, interpret emotions, or scan retail shelves. In medicine, AI-enhanced imaging systems detect tumors with precision approaching—or sometimes exceeding—human experts. In law enforcement and journalism, image metadata is used to verify authenticity and trace origins.

The term “photographic intelligence” also extends into data visualization, where complex datasets are turned into visual representations: heat maps, topographic models, or microscopic scans with fluorescent labeling. These are not just illustrations—they’re tools for interpretation and pattern recognition.

This blending of photography and analytics—vision and calculation—marks a shift in how we use images. Where photography once meant freezing a moment, it now means generating insight. The image is not the end product; it’s a doorway into further computation, prediction, and action.

But this power comes with responsibility. As our capacity to “see” expands—across space, time, and even probability—so does the importance of how those images are used, interpreted, and preserved. Image intelligence, like all intelligence, must be guided by wisdom.

6. Types of Photography and What They Reveal

Documentary, scientific, artistic, forensic, astronomical, and more

Photography wears many masks. One moment it is art, the next it is evidence. In some hands it is a mirror of the world; in others, a scalpel. The diversity of photographic practice is not a splintering, but a deepening—each type asking different questions of light, time, and truth.

Documentary Photography

At its core, documentary photography seeks to tell the truth—unfiltered, unposed. From Jacob Riis’s gritty images of New York tenements to Dorothea Lange’s iconic Migrant Mother, this genre captures social realities with the intent to inform, challenge, or bear witness. Scientific documentation follows a similar impulse: capturing processes, changes, or anomalies as they occur, whether in the lab or the field.

Scientific Photography

Here, precision takes precedence over aesthetics. This includes microscopy, astrophotography, time-lapse imaging, and high-speed photography. It’s not about beautiful light—it’s about revealing patterns, structures, and behaviors. Even mundane images, like a thermal scan of a heat exchanger or a spectrum plot, can be powerful in their capacity to illuminate phenomena invisible to the naked eye.

Artistic and Conceptual Photography

On the other end of the spectrum is the photography of expression. Here, the image isn’t a reference, but a metaphor. Artists like Man Ray, Cindy Sherman, and Hiroshi Sugimoto use photography to explore identity, abstraction, and perception. Increasingly, artists are merging scientific tools into their work—scanning DNA, using long-exposure to track stars, or processing images through algorithms.

Forensic Photography

The eye of the investigator is methodical. Forensic photography is used to document crime scenes, injuries, and evidence with legal integrity. Lighting, scale, and angle are rigorously controlled to ensure the images hold up under scrutiny. It is photography with legal consequences—where objectivity must be measurable.

Astronomical and Remote Photography

Looking outward, astrophotography captures phenomena beyond Earth: solar flares, planetary orbits, the swirl of distant galaxies. Remote sensing overlaps here, focusing on Earth from above—using satellite or drone-based systems to study terrain, climate, and vegetation.

Environmental and Wildlife Photography

Bridging science and storytelling, environmental photography documents ecosystems, species, and human impact. Whether it’s a polar bear adrift on melting ice or a detailed macro of a pollinating bee, these images awaken both awareness and empathy.

Consumer Photography

In the age of smartphones and social media, photography has become a daily habit. Billions of people now take photos not for science, art, or commerce—but for memory, identity, and connection. A birthday cake, a sunset, a selfie—these may seem mundane, but they are a democratic revolution in visual culture. Today’s consumer photography is candid, ephemeral, and algorithmically enhanced. Yet it is also a cultural mirror, showing how we live, what we value, and how we see ourselves in the world.

Commercial Photography

Where art meets commerce, commercial photography turns aesthetics into persuasion. From fashion editorials to food styling, product shoots to architectural showcases, the goal here is clarity, appeal, and branding. Scientific precision often overlaps—especially in fields like industrial, aerial, or medical equipment photography, where technical accuracy supports business needs. This genre demands mastery not only of lighting and technique, but of psychology and market insight.

Experimental and Computational Photography

This growing category explores what happens when photography meets code. Light field cameras, generative adversarial networks (GANs), and other algorithmic systems allow for refocusing, 3D modeling, or even creating images from pure data. The image is no longer a window—it’s a simulation.

Each of these types shares a common root: the desire to see more deeply. Whether we call it art, science, journalism, or proof, photography is ultimately a way of asking the world to show itself—and of listening closely to what it reveals.

7. Digital Photography and Computational Imaging

From pixels to post-processing: how computation reshaped light and form

If film photography was chemistry, digital photography is mathematics. What was once a reaction of silver salts in a darkroom is now an array of electrical signals and machine-coded enhancements. With the rise of digital cameras in the early 2000s, and the smartphone explosion that followed, photography became not only portable—but programmable.

At the heart of digital photography is the image sensor, usually a CMOS or CCD chip, which translates light into electrical charges. Each photosite on the sensor records the brightness of the light that reaches it, usually through a colored filter, and software combines neighboring readings into full-color pixels: a grid of data points that reconstructs the scene. But what happens next is where the true transformation begins.

Computational imaging refers to any photographic method in which software plays a significant role in forming or enhancing the final image. In smartphones, this might mean multi-frame compositing, where the camera takes several exposures in rapid succession and blends them to reduce noise or increase dynamic range. Features like portrait mode, night mode, and HDR are all powered by algorithms, many of them trained on millions of other photos.
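The noise-reduction half of multi-frame compositing can be sketched with simple averaging. This is a simplified simulation, assuming a static scene, perfectly aligned frames, and Gaussian noise; real phone pipelines also align frames and reject motion, which is omitted here. All names and numbers are illustrative.

```python
import random


def capture_frame(scene, noise_sigma, rng):
    """Simulate one exposure: the true scene plus Gaussian sensor noise."""
    return [value + rng.gauss(0.0, noise_sigma) for value in scene]


def merge_frames(frames):
    """Average already-aligned frames pixel by pixel."""
    return [sum(samples) / len(samples) for samples in zip(*frames)]


def rms_error(image, scene):
    """Root-mean-square difference between an image and the true scene."""
    return (sum((a - b) ** 2 for a, b in zip(image, scene)) / len(scene)) ** 0.5


rng = random.Random(42)          # fixed seed so the run is repeatable
scene = [100.0] * 2000           # a flat gray patch, flattened to one dimension

single = capture_frame(scene, 10.0, rng)
burst = [capture_frame(scene, 10.0, rng) for _ in range(16)]
merged = merge_frames(burst)

print(f"single-frame noise: {rms_error(single, scene):.2f}")
print(f"16-frame merge noise: {rms_error(merged, scene):.2f}")
```

Averaging N independent frames reduces random noise by about the square root of N, so a 16-frame burst should come out roughly four times cleaner than a single exposure.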

Professional systems go even further. In light-field photography, for example, cameras capture the direction and intensity of light rays, allowing users to refocus or shift perspective after the photo has been taken. In tomographic imaging, used in medicine and materials science, computation assembles two-dimensional slices into fully navigable 3D models. Synthetic aperture imaging combines measurements taken from many positions so that a small antenna or aperture can match the resolution of a much larger one, a technique used in radar and astronomy.

The digital darkroom has also evolved. Software like Adobe Photoshop, Lightroom, and open-source platforms like GIMP allow photographers to manipulate color, contrast, texture, geometry, and even content itself. What once required physical filters and hours in a red-lit room can now be achieved with sliders and masks. But it also raises a perennial question: where is the line between enhancement and fabrication?

More radically, AI-driven photography is now entering a new realm: image generation. Generative models can create entirely synthetic photographs—of people who don’t exist, places no lens has seen. These tools can hallucinate details from blurred images or infer lighting that never occurred. In scientific visualization, they can simulate missing data or extrapolate patterns from limited inputs.

Yet computation is not the enemy of authenticity. In many cases, it brings out the essence of what was there—revealing textures, lighting subtleties, or fleeting expressions that traditional photography would have lost. The goal is not deception but amplification: of meaning, mood, or scientific insight.

Digital photography, far from ending the photographic tradition, has deepened it. It has moved the craft from a purely optical process to a hybrid of physics, software, and perception. It has turned every shutter click into a collaboration—not just between subject and photographer, but between light and code.

8. The Science of Seeing – Light, Optics, and Perception

How our brains and devices translate light into meaning

To photograph is to play with light. But what is light, really? And what happens—physically, optically, and mentally—when it touches a lens, or an eye?

At its core, light is a wave—and a particle. This dual nature, described by quantum mechanics, means light behaves both like a vibrating field and a stream of energy packets called photons. When light strikes a surface or enters a lens, it can reflect, refract, diffract, or be absorbed. The science of photography depends on controlling these behaviors.

Optics, the study of how light travels and bends, forms the foundation of both human vision and camera design. Lenses, whether in your eye or your camera, refract light to focus it onto a surface: your retina, or a digital sensor. Aperture size controls how much light enters and how much of the scene appears sharp. Focal length sets magnification and field of view. Shutter speed determines how long the light is captured. Every photographic choice is, in essence, a manipulation of these physical parameters.
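The trade-off between aperture and shutter speed is often summarized as an exposure value, EV = log2(N² / t), where N is the f-number and t the shutter time in seconds. The sketch below uses that standard formula; the function name and the example settings are illustrative.

```python
import math


def exposure_value(f_number: float, shutter_seconds: float) -> float:
    """EV = log2(N^2 / t). Settings with the same EV admit the same amount of light."""
    return math.log2(f_number ** 2 / shutter_seconds)


# Opening the aperture one stop while halving the shutter time
# leaves the exposure essentially unchanged.
settings = [(8.0, 1 / 125), (5.6, 1 / 250), (11.0, 1 / 60)]
for f_number, shutter in settings:
    print(f"f/{f_number:g} at 1/{round(1 / shutter)} s: EV {exposure_value(f_number, shutter):.2f}")
```

The three settings land within a small fraction of a stop of each other because marked f-numbers such as 5.6 and 11 are rounded. The photographer chooses among them for depth of field or motion blur, not for brightness.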

But seeing is more than optics. It’s also perception. In the brain, the signals from the retina are reassembled into the experience of an image. Neuroscientists know this process is not passive. The visual cortex edits, enhances, and sometimes even invents parts of the scene. We fill in blanks. We assign emotional weight. We “see” what we expect to see.

This subjective element also applies to cameras—especially digital ones. White balance algorithms adjust for the color temperature of light sources. Autoexposure systems attempt to average scenes for proper brightness. Face and scene detection uses machine learning to determine what should be in focus, or what counts as important. In both the brain and the camera, raw input is not the final image.
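As a rough illustration of automatic white balance, the sketch below implements the classic gray-world heuristic, which assumes the average color of a scene should be neutral gray. Camera makers use far more elaborate and usually proprietary methods, so this is a stand-in rather than any particular camera's algorithm. The pixel values are invented, and the code assumes no channel averages to zero.

```python
def gray_world_gains(pixels):
    """Per-channel gains that pull the average scene color to neutral gray."""
    count = len(pixels)
    means = [sum(pixel[c] for pixel in pixels) / count for c in range(3)]
    gray = sum(means) / 3
    return [gray / mean for mean in means]


def apply_gains(pixels, gains):
    """Scale each channel by its gain, clipping to the 0..255 display range."""
    return [tuple(min(255.0, pixel[c] * gains[c]) for c in range(3)) for pixel in pixels]


# Hypothetical gray objects under warm light: red inflated, blue suppressed.
warm = [(200.0, 150.0, 100.0), (100.0, 75.0, 50.0), (160.0, 120.0, 80.0)]

gains = gray_world_gains(warm)
balanced = apply_gains(warm, gains)

print("gains (R, G, B):", [round(g, 2) for g in gains])
for before, after in zip(warm, balanced):
    print(before, "->", tuple(round(v, 1) for v in after))
```

The heuristic fails when a scene really is dominated by one color, such as a sunset or a green field, which is one reason modern cameras lean on scene detection as well.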

Color adds another layer of complexity. Human eyes perceive color through three types of cone cells, most sensitive to long, medium, and short wavelengths, which we experience roughly as red, green, and blue. But these perceptions are shaped by context and comparison. Similarly, most cameras place a Bayer filter over the sensor so that each photosite records only one color, then use software interpolation to reconstruct the rest, often tweaking hues for visual appeal or fidelity to memory rather than physics.
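The idea of one color sample per photosite can be shown with a toy example. The sketch assumes the common RGGB Bayer layout and uses a deliberately crude reconstruction that collapses each 2x2 cell into a single pixel. Real cameras interpolate to full resolution with more sophisticated methods, so this illustrates the principle rather than a production demosaicing algorithm.

```python
def mosaic_rggb(rgb_image):
    """Keep one color sample per photosite, following an RGGB Bayer layout."""
    raw = []
    for y, row in enumerate(rgb_image):
        raw_row = []
        for x, (r, g, b) in enumerate(row):
            if y % 2 == 0:
                raw_row.append(r if x % 2 == 0 else g)  # even rows: R G R G ...
            else:
                raw_row.append(g if x % 2 == 0 else b)  # odd rows:  G B G B ...
        raw.append(raw_row)
    return raw


def demosaic_half_resolution(raw):
    """Collapse each 2x2 RGGB cell into one RGB pixel, averaging the two greens."""
    out = []
    for y in range(0, len(raw) - 1, 2):
        out_row = []
        for x in range(0, len(raw[y]) - 1, 2):
            red = raw[y][x]
            green = (raw[y][x + 1] + raw[y + 1][x]) / 2
            blue = raw[y + 1][x + 1]
            out_row.append((red, green, blue))
        out.append(out_row)
    return out


# A flat orange patch, 4x4 pixels, as (R, G, B).
flat = [[(200, 120, 40)] * 4 for _ in range(4)]
raw = mosaic_rggb(flat)

print("raw row 0:", raw[0])
print("raw row 1:", raw[1])
print("reconstructed:", demosaic_half_resolution(raw))
```

On a uniform patch the reconstruction is exact. On fine detail or sharp edges, neighboring photosites see different parts of the scene, which is where interpolation artifacts and the camera's aesthetic choices come in.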

In this sense, photography is not just a technical act—it is a translation. It translates light into form. Form into interpretation. Interpretation into memory. And with each layer, it asks us to consider: What am I really seeing? What has been filtered, framed, adjusted—or missed?

Understanding the science of seeing doesn’t rob photography of its magic. It grounds the magic. It reminds us that behind every image is a chain of decisions—by photons, lenses, processors, and minds—all working together to shape the visible world.

9. Photography as Scientific Method and Metaphor

Case studies in how images changed our understanding of nature

Photography does more than record discoveries—it often becomes the discovery itself. In science, seeing is not just believing; it is knowing. An image can confirm a hypothesis, falsify a theory, or inspire an entirely new line of inquiry. At its best, photography does what all science aims to do: clarify the structure of reality.

Historically, scientific photography has often revealed the invisible. The first X-ray image—of Wilhelm Röntgen’s wife’s hand—showed bones and a wedding ring beneath the skin. It was eerie, intimate, and revolutionary. The photographic plate didn’t just document—it proved that something unknown could now be seen, studied, and understood.

In biology, photomicrography turned microbial life, until then seen only by whoever sat at the eyepiece, into a record that could be measured, compared, and shared. In physics, high-speed photography by Harold Edgerton caught a bullet piercing an apple and a drop of milk forming a crown, images that changed how scientists understood motion, shockwaves, and fluid dynamics. In astronomy, the Hubble Deep Field photograph, which revealed thousands of galaxies in a tiny patch of seemingly empty sky, expanded not only scientific data but human perspective.

Sometimes, it is the process of photography that mimics the scientific method. A camera observes systematically. It isolates a variable (time, focus, perspective), captures it under controlled conditions, and repeats. Each frame is a controlled experiment—especially in disciplines like time-lapse, astrophotography, or longitudinal studies, where image sequences become data sets.

But photography is not only scientific in method—it is also metaphorical. In language, we speak of “bringing things to light,” “developing a picture,” or “focusing on the details.” These are more than turns of phrase. They reflect the psychological and epistemological power of photography to shape how we think about thinking itself. Science often borrows this vocabulary to describe its process: illuminating truth, exposing assumptions, capturing a phenomenon in action.

Images also carry emotional weight that data tables cannot. A single photograph—of a melting glacier, a starving polar bear, a human embryo at eight weeks—can sway public opinion or inspire scientific funding. These images don’t just inform—they compel. They remind us that science is not separate from the human condition; it is intertwined with it.

Photography, then, becomes both microscope and mirror: a tool to examine the world, and a reflection of how we wish to see it. It lives at the edge of objectivity and subjectivity, method and meaning, evidence and interpretation. And at that edge, it tells stories that equations alone cannot.

10. The Ethical Lens – Truth, Manipulation, and Representation

The responsibility of seeing and showing in a world of deepfakes and filters

Every photograph is a choice. A choice of what to show, what to crop, what to light, and what to leave in shadow. Even in the most scientific of contexts, the image is not the world itself—it is a representation. And with representation comes responsibility.

From the earliest days of photography, the medium has worn the mask of objectivity. A photograph appears to be a mirror—faithful, unflinching, neutral. But we now know better. Images can be staged. Contexts can be stripped. And in the digital age, pixels can be altered in ways indistinguishable from reality.

The implications are vast. In journalism, an altered photo can mislead the public. In science, manipulated data images can lead to retracted studies and lost trust. In social media, airbrushed faces and artificial backdrops distort standards of beauty, identity, and authenticity. The ethical stakes have never been higher.

With the advent of deepfakes and AI-generated imagery, the very concept of photographic truth is under siege. Today, software can fabricate images of people who never existed, recreate events that never happened, or subtly distort real footage for persuasive effect. This blurs the line not just between truth and fiction—but between evidence and illusion.

In response, new ethical frameworks are emerging. Scientific journals now require clear documentation of image processing techniques. Photojournalism associations enforce strict codes of conduct. Forensic tools can analyze metadata and error levels to detect signs of tampering. And growing movements in both photography and science advocate for visual transparency—the open disclosure of how images are made, modified, and interpreted.

Still, ethical photography is not just about avoiding deception. It’s also about recognizing power. Who takes the image? Who controls the narrative? Who is being seen, and who is left out of the frame? These questions apply equally in war zones, laboratories, fashion magazines, and AI datasets. Representation is never neutral.

And then there is the quiet ethics of everyday photography. The ethics of photographing strangers. Of documenting suffering. Of posting images of others without consent. In an era where everyone is a photographer, these decisions are no longer reserved for professionals—they belong to all of us.

The camera may be a machine, but photography is a moral act. Every frame reflects not just the world, but the photographer’s stance within it. As our tools become more powerful, so too must our awareness of what we capture, what we share, and what stories we choose to tell—or not to tell.

11. The Future of Imaging – Quantum, Hyperspectral, and Beyond

What tomorrow’s lenses will show us—and how we’ll need to prepare

If the past two centuries of photography expanded the range of human vision, the next frontier may redefine vision itself. The future of imaging is not merely about seeing more—it’s about seeing differently: deeper, faster, smarter, and more meaningfully.

One of the most exciting areas of advancement is quantum imaging. Still in its infancy, this field uses quantum entanglement and photon correlation to form images in extremely low light, in some cases from photons that never interacted with the object itself. A related line of research pairs single-photon detectors with time-of-flight analysis to reconstruct objects hidden around corners. In theory, quantum cameras could bypass some traditional optical limitations, offering resolution beyond classical limits without increasing physical sensor size.

Meanwhile, hyperspectral imaging is pushing beyond the familiar red-green-blue channels to capture hundreds of narrow wavelength bands across the electromagnetic spectrum. This allows for the detection of subtle material differences invisible to conventional cameras—such as early signs of disease in plants, chemical compositions in art conservation, or skin abnormalities in medical scans. Hyperspectral cameras are already used in agriculture, defense, and planetary exploration, and may soon find a place in everyday devices.
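One simple way to compare the many-band spectra such cameras record is the spectral angle, which treats each spectrum as a vector and measures the angle between them. It is offered here as an illustrative technique, not one the article prescribes; the five-band reflectance values are invented, and the code assumes neither spectrum is all zeros.

```python
import math


def spectral_angle(a, b):
    """Angle in radians between two spectra; 0 means identical spectral shape."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    cosine = max(-1.0, min(1.0, dot / norm))  # guard against rounding drift
    return math.acos(cosine)


# Hypothetical reflectance in five narrow bands, from visible to near-infrared.
reference_healthy = [0.05, 0.08, 0.04, 0.45, 0.50]
leaf_in_shade = [0.025, 0.04, 0.02, 0.225, 0.25]   # same shape, half as bright
leaf_diseased = [0.08, 0.10, 0.09, 0.30, 0.32]

print(f"shaded leaf:   {spectral_angle(reference_healthy, leaf_in_shade):.3f} rad")
print(f"diseased leaf: {spectral_angle(reference_healthy, leaf_diseased):.3f} rad")
```

Because the angle ignores overall brightness, the shaded leaf still matches the healthy reference while the diseased one does not, a distinction a three-channel camera would struggle to make.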

Real-time 3D imaging is another emerging domain, combining LiDAR (light detection and ranging), photogrammetry, and depth-sensing technologies. These systems are becoming smaller, cheaper, and more accurate, enabling applications in autonomous vehicles, robotic surgery, AR/VR, and even archaeology. We are not just photographing surfaces—we are digitizing space itself.
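At its core, LiDAR ranging is a time-of-flight calculation: a pulse travels to the target and back, so the distance is half the round-trip time multiplied by the speed of light. The sketch below shows that arithmetic and how a range plus beam angles becomes a 3D point. The sample returns are hypothetical, and real systems also handle calibration, noise, and multiple echoes.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def lidar_range_m(round_trip_seconds: float) -> float:
    """Distance to the target; the pulse goes out and back, so halve the path."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def to_point(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert a range and beam direction into (x, y, z) in meters."""
    azimuth = math.radians(azimuth_deg)
    elevation = math.radians(elevation_deg)
    horizontal = range_m * math.cos(elevation)
    return (
        horizontal * math.cos(azimuth),
        horizontal * math.sin(azimuth),
        range_m * math.sin(elevation),
    )


# Hypothetical returns: (round-trip time in seconds, azimuth, elevation in degrees).
returns = [(66.7e-9, 0.0, 0.0), (133.4e-9, 90.0, 0.0), (100.0e-9, 45.0, 10.0)]

cloud = [to_point(lidar_range_m(t), az, el) for t, az, el in returns]
for x, y, z in cloud:
    print(f"({x:7.3f}, {y:7.3f}, {z:7.3f})")
```

A round trip of about 67 nanoseconds corresponds to a target roughly 10 meters away, which gives a sense of the timing precision these sensors need.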

Then there is AI-generated imaging. As generative models like GANs and diffusion models become more sophisticated, they may soon generate entirely realistic simulations of biological processes, space phenomena, or molecular interactions based on real-world data and predictive algorithms. This raises intriguing possibilities for science education and simulation, but also new concerns about synthetic evidence.

In healthcare, the convergence of bio-imaging, genetic mapping, and real-time diagnostics may allow for personalized, internal “photographs” of disease progression—combining data from MRI, PET scans, and cellular imaging into one coherent, predictive picture.

But as imaging becomes more advanced, so must our philosophy of vision. What will it mean to live in a world where every surface can be scanned, every person tracked, every structure rendered in high fidelity? Who controls the cameras? Who interprets the images? Who decides what gets seen, and what stays in the dark?

The future of imaging will not just be about resolution, color depth, or speed. It will be about ethics, interpretation, and purpose. The lens will become more powerful—but so must the eye behind it.

12. Conclusion – The Light Inside the Lens

Photography not just as observation, but illumination

We often think of photography as a way to look outward—to capture the world in a frame, to preserve what is. But at its deepest level, photography is also a way of looking inward. A way of seeing how we see. A way of illuminating not only the subject, but the observer.

The science of photography, like all science, is a story of refinement: sharper lenses, faster sensors, deeper wavelengths, smarter algorithms. But parallel to this technical evolution is a quieter journey—one that asks what we value, what we remember, what we choose to witness. Every image is a mirror, angled both at the object and at the mind behind the camera.

In the laboratory, the observatory, and the human heart, photography continues to evolve as both tool and language. It renders bone structure and brain activity. It reveals tectonic shifts in continents and climate. It captures the expression on a stranger’s face—and reminds us that data and emotion are not opposites, but layers of the same truth.

In an age overwhelmed by images—billions taken daily, many discarded, others weaponized—the challenge is no longer just how to photograph, but why. What will we use this power to reveal? To protect? To understand?

The lens is no longer merely glass. It is digital, quantum, ethical, and metaphorical. It belongs to satellites, scientists, artists, and ordinary people with phones in their hands. But in all cases, it still does what it has always done: bend light, focus thought, and make the unseen seen.

At its best, photography is not just a recording device. It is a way of paying attention. And in a world that desperately needs more clarity, more empathy, and more vision—that might be the most important science of all.